It is 2026, and the technological landscape has shifted fundamentally. We are no longer just “writing code”; we are orchestrating complex ecosystems. Artificial Intelligence is woven into nearly every layer of the stack, Kubernetes has effectively become the operating system of the cloud, and Platform Engineering is increasingly how teams deliver on the promises of DevOps.

In this whirlwind of innovation, where speed and reliability are the currency of the industry, one tool has gone from a “nice-to-have” utility to a non-negotiable necessity for every developer: Docker.

If you are still hesitant about learning containerization, or if you view it as merely an “ops” concern, you are limiting your potential. Here is why Docker is the most critical skill for your software engineering career in 2026.

The “Works on My Machine” Problem is Finally Dead

For decades, the bane of every developer’s existence was the dreaded phrase: “But it works on my machine!”

We have all been there. You spend days building a feature, only to have it crash instantly in the testing environment. You spend hours debugging, only to realize the server had a slightly different version of Node.js, a missing system library, or a different OS patch than your local laptop.

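Docker goes a long way toward solving this dependency hell. You package your application and all its dependencies (libraries, runtimes, system tools, and configuration) into a single immutable artifact called an image, and every container started from that image begins from the same filesystem. The same image behaves the same way on your MacBook, your colleague’s Windows machine, the QA team’s Linux server, and the production cluster in the cloud. Two caveats are worth knowing: on macOS and Windows, Linux containers run inside a lightweight Linux virtual machine, and an image built for one CPU architecture (say, arm64) needs a matching or multi-platform build to run on another (amd64).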

In 2026, where deployment cycles happen in minutes rather than weeks, this consistency is not a luxury; it is a requirement. “It works on my machine” is no longer a valid excuse; it’s a relic of the past.

Supercharging Onboarding and Collaboration

Imagine it is your first day at a new job or you are joining a new open-source project. In the pre-Docker era, your first week was often spent reading a dusty “Setup Wiki,” manually installing databases, configuring environment variables, and wrestling with version conflicts.

In 2026, thanks to Docker, onboarding can take minutes instead of days. You clone the repository, run a single command like docker compose up, and the entire architecture springs to life. The backend API, the frontend application, the Redis cache, the PostgreSQL database, and the message broker all spin up in isolated networks, pre-configured and ready to go.

Docker turns the development environment into code, which means the environment itself is version-controlled. If a team member upgrades the database version, they change a line in the docker-compose.yml file. When you pull the latest code and run docker compose up again, Compose notices the change and recreates the affected containers with the new version. This removes most of the friction from collaboration, allowing teams to focus on logic, not plumbing.
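To make the "environment as code" idea concrete, here is a minimal Python sketch that treats a Compose-style configuration as plain data and reports which services changed between two versions of it. The service names, the ./api build path, and the image tags are illustrative assumptions, not taken from any real project, and the diff is only a rough stand-in for how a tool could decide which containers to recreate.

```python
import json


def compose_config(postgres_tag: str) -> dict:
    """Return a Compose-style mapping. All names and tags are illustrative."""
    return {
        "services": {
            "api": {
                "build": "./api",  # hypothetical path to the backend's Dockerfile
                "ports": ["8000:8000"],
                "depends_on": ["db", "cache"],
            },
            "db": {"image": f"postgres:{postgres_tag}"},
            "cache": {"image": "redis:7"},
        }
    }


def changed_services(old: dict, new: dict) -> list[str]:
    """List services whose definition differs between two config versions."""
    names = set(old["services"]) | set(new["services"])
    return sorted(
        name
        for name in names
        if old["services"].get(name) != new["services"].get(name)
    )


before = compose_config("15")  # what you had checked out yesterday
after = compose_config("16")   # what a teammate committed today

print(changed_services(before, after))  # ['db']
print(json.dumps(after, indent=2))
```

Because the whole environment is a single reviewable data structure, a one-line database upgrade shows up in a diff like any other code change.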

The Enabler of Polyglot & Microservices Architectures

The era of the monolith is largely behind us. Modern applications are composed of loosely coupled microservices, often written in different languages to leverage specific strengths. You might have a high-performance service in Rust, a data-processing worker in Python, and a real-time web server in Node.js.

Managing this “polyglot” stack on a local machine without Docker is a nightmare. You would have to juggle several runtime versions side by side and keep services from fighting over the same ports.

Docker abstracts this complexity entirely. It allows you to encapsulate each service in its own container with its own specific environment. The Rust container doesn’t care that the Node container needs a specific version of OpenSSL. They coexist peacefully on the same kernel. This freedom allows developers in 2026 to pick the right tool for the job without worrying about how to install it on the production server.
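One detail that is easy to miss: each container has its own network namespace, so two services can both listen on port 8080 internally. Only the ports you publish on the host have to be unique. The sketch below checks a set of "HOST:CONTAINER" port mappings for host-side collisions. The service names and port numbers are hypothetical, and it assumes the simple two-part mapping form only.

```python
from collections import defaultdict


def host_port(mapping: str) -> int:
    """Extract the host port from a 'HOST:CONTAINER' mapping string."""
    host, _container = mapping.split(":")
    return int(host)


def find_port_conflicts(services: dict[str, list[str]]) -> dict[int, list[str]]:
    """Return host ports that more than one service tries to publish."""
    claims: dict[int, list[str]] = defaultdict(list)
    for name, mappings in services.items():
        for mapping in mappings:
            claims[host_port(mapping)].append(name)
    return {
        port: sorted(names) for port, names in claims.items() if len(names) > 1
    }


# Hypothetical polyglot stack. Two services use container port 8080,
# which is fine; two services publish host port 8080, which is not.
services = {
    "rust-service": ["8080:8080"],
    "python-worker": ["9000:8080"],
    "node-web": ["8080:3000"],
}

print(find_port_conflicts(services))  # {8080: ['node-web', 'rust-service']}
```

A check like this could run before starting the stack, so a clash is reported by name instead of as a bind error at startup.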

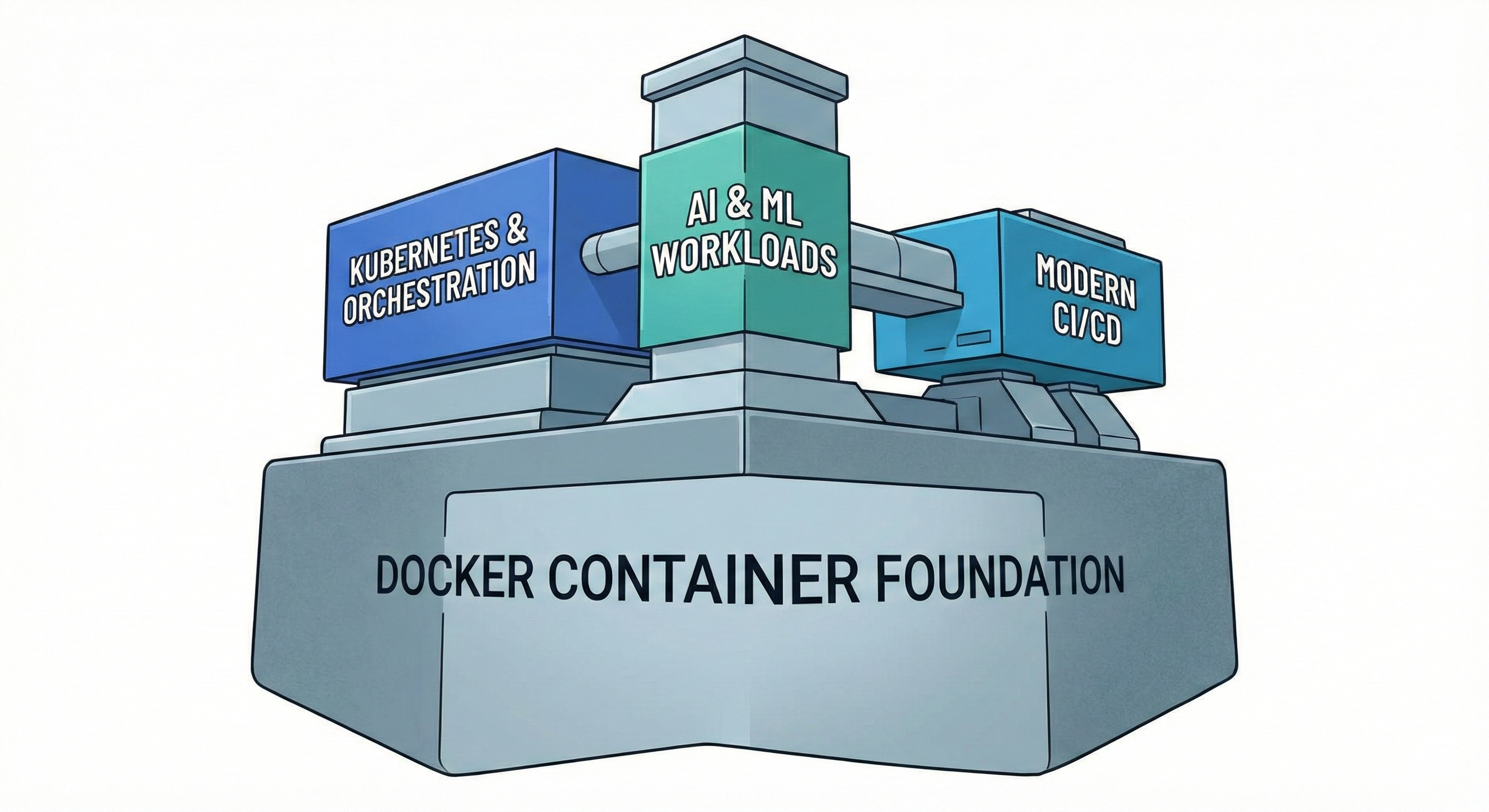

Image: A visual representation of the Docker container as the fundamental foundation for modern software engineering practices, including Kubernetes orchestration, AI & ML workloads, and modern CI/CD pipelines.

The Foundation of the Cloud-Native Stack

You might be thinking, “Isn’t everyone using Kubernetes now?”

Yes, Kubernetes is the dominant force for orchestrating applications at scale. However, you must ask yourself: What does Kubernetes orchestrate? It orchestrates containers.

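You cannot effectively understand, debug, or build for Kubernetes without a solid, foundational grasp of containers, and Docker is the most common way to build them. Modern Kubernetes clusters typically run containers through a runtime such as containerd or CRI-O rather than Docker Engine itself, but images built with Docker follow the same OCI standard and run there unchanged. If Kubernetes is the conductor of the orchestra, the container is the instrument. Writing a deployment manifest without understanding how Dockerfiles work is like trying to write a novel without knowing the alphabet.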

Furthermore, the rise of “Serverless Containers” (like Google Cloud Run or AWS Fargate) allows developers to deploy container images directly to the cloud without provisioning or patching servers. If you can containerize your app, the platform takes on much of the scaling and operational work for you.

Critical for AI and Machine Learning Engineering

The explosion of AI has made environment management even more complex. AI/ML workloads are notoriously fragile regarding dependencies. A specific build of PyTorch targets a specific CUDA version, which in turn requires a sufficiently recent NVIDIA driver on the host.

Trying to replicate these environments manually is a recipe for disaster. Docker has become a standard distribution mechanism for AI models. It allows Data Scientists and ML Engineers to package their models with the exact Python packages and CUDA user-space libraries required to run them. The GPU driver itself stays on the host and is exposed to the container at run time, typically through the NVIDIA Container Toolkit.
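As a rough illustration of what "packaging the exact libraries" means, the sketch below renders a small Dockerfile from a dictionary of pinned package versions. The base image tag, the version pins, and the serve.py entry point are placeholder assumptions chosen for the example, not a recommended or tested combination. A real GPU image would usually start from a CUDA-enabled base image instead.

```python
import json


def render_dockerfile(
    base_image: str, pins: dict[str, str], entrypoint: list[str]
) -> str:
    """Render a minimal Dockerfile that installs exactly the pinned packages."""
    # Sort the pins so the same input always yields the same file.
    requirements = " ".join(
        f"{name}=={version}" for name, version in sorted(pins.items())
    )
    lines = [
        f"FROM {base_image}",
        "WORKDIR /app",
        f"RUN pip install --no-cache-dir {requirements}",
        "COPY . /app",
        # json.dumps produces the exec-form array, e.g. ["python", "serve.py"]
        f"CMD {json.dumps(entrypoint)}",
    ]
    return "\n".join(lines) + "\n"


dockerfile = render_dockerfile(
    base_image="python:3.12-slim",               # assumed base image
    pins={"torch": "2.3.1", "numpy": "1.26.4"},  # illustrative pins only
    entrypoint=["python", "serve.py"],           # hypothetical inference script
)
print(dockerfile)
```

The point is less the generator than the result: every version that matters is written down in one file, so the researcher's environment and the inference endpoint are built from the same recipe.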

In 2026, “AI Engineering” involves deploying these models as inference APIs. Docker provides the portability required to move a model from a researcher’s powerful GPU workstation to a cloud inference endpoint seamlessly.

Security and “Shift Left”

Finally, security in 2026 is everyone’s responsibility. Docker enables the “Shift Left” security philosophy. Because the environment is defined in code (the Dockerfile), you can build the image locally and scan it for vulnerabilities before it ever leaves your laptop.

Modern CI/CD pipelines can automatically scan Docker images for known CVEs (Common Vulnerabilities and Exposures) every time you commit code. This proactive approach helps you avoid shipping a web server with a known exploit. Misconfigurations such as a database left on its default password are a different class of problem, caught by configuration and secret checks rather than by CVE scanning. Learning Docker helps you understand the anatomy of your application’s security surface.
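A pipeline gate of this kind can be sketched in a few lines of Python. The report format below (a list of findings with id, package, and severity fields) is a hypothetical stand-in and not the output of any particular scanner, and the finding IDs are placeholders rather than real CVEs.

```python
# Higher rank means more severe.
SEVERITY_RANK = {"LOW": 1, "MEDIUM": 2, "HIGH": 3, "CRITICAL": 4}


def blocking_findings(findings: list[dict], threshold: str = "HIGH") -> list[dict]:
    """Return the findings at or above the severity threshold."""
    limit = SEVERITY_RANK[threshold]
    return [f for f in findings if SEVERITY_RANK[f["severity"]] >= limit]


# Hypothetical scan report with placeholder IDs and package names.
report = [
    {"id": "EXAMPLE-0001", "package": "libfoo", "severity": "LOW"},
    {"id": "EXAMPLE-0002", "package": "libbar", "severity": "CRITICAL"},
    {"id": "EXAMPLE-0003", "package": "libbaz", "severity": "HIGH"},
    {"id": "EXAMPLE-0004", "package": "libqux", "severity": "MEDIUM"},
]

blockers = blocking_findings(report)
for finding in blockers:
    print(f"{finding['severity']:<8} {finding['id']} in {finding['package']}")

# A CI job would exit with this code: non-zero fails the build.
exit_code = 1 if blockers else 0
print(f"exit code: {exit_code}")
```

Choosing the threshold is a policy decision: failing on every low-severity finding tends to get the gate switched off, while failing only on high and critical ones keeps it strict enough to matter.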

Image: A visual representation of the “supercharged” developer workflow in 2026, where Docker acts as the central engine allowing developers to seamlessly code, orchestrate, and monitor complex distributed systems from a single, high-tech workstation.

Conclusion

In 2026, Docker is no longer a specialized skill reserved for DevOps engineers or System Administrators. It is a fundamental literacy for anyone who writes code, from frontend developers to backend architects to data scientists.

It is the key to consistent environments, the gateway to the cloud-native world, and a massive booster for your personal productivity. It allows you to treat infrastructure as a flexible, programmable resource rather than a rigid barrier.

If you want to stay relevant, build scalable applications, and work effectively in modern, high-velocity teams, learning Docker is not just a recommendation—it is a necessity. The best time to start was yesterday. The second best time is today.